LLM Model Merging

In this article, I will share my views on model blending techniques and algorithms and discuss the results of my own model blending experiments, which have been published on the Hugging Face Open LLM Leaderboard, along with my personal insights and analysis. To jump straight to the results, go to the "My Model Merging Experiments" section near the bottom.

Background

Towards the end of last year, model merging rapidly gained popularity in the research community with the publication of several merging techniques, followed by Mistral AI's unannounced release of a large model via torrent.

This model, now known as Mixtral 8x7B, is a high-quality sparse mixture-of-experts (MoE) model. Its release sparked significant interest in model merging and stacking, as such models have been shown to achieve very high benchmark scores. In this article, I will delve into these developments and share my analysis of the current state of model merging research.

Mistral AI's Mixtral 8x7B results speak for themselves: they are very good, as the performance benchmark chart published by Mistral AI shows.

Honestly, the net result is truly remarkable for the following reasons:

- It's an open-source model that can outperform a commercial product like GPT-3.5 on five benchmarks

- It can outperform a much larger model, Llama 2 70B, on benchmarks while using only about 13B active parameters per token

- It performs well in multilingual tests

Tell me more about Mixtral 8x7B?

The Mixtral 8x7B model is not a single dense model but a sparse Mixture of Experts (MoE) language model built on the Mistral 7B architecture: each layer contains eight feed-forward "expert" blocks, while the attention layers are shared. The instruction-tuned variant, Mixtral 8x7B Instruct, was optimized through both supervised fine-tuning and direct preference optimization (DPO). Its compute cost per token is roughly that of a 13B model, although all of its roughly 47B parameters must be held in memory. For more detailed information, please refer to the paper: https://arxiv.org/pdf/2401.04088.pdf

How does MoE work?

Essentially, instead of a single large feed-forward network, each layer holds an array of smaller, fast experts, which keeps processing both quick and efficient.

A router network then selects 2 of the 8 experts per token at each layer, so the model has access to 47B parameters while actively using only about 13B during inference. This approach, combined with a 32k-token context size, balances performance and efficiency.
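To make the routing concrete, here is a minimal NumPy sketch of one sparse MoE feed-forward step for a single token. It follows the top-2 gating described in the Mixtral paper (a softmax over the two highest router logits, then a weighted sum of the two selected experts), but the sizes are toy values and the experts are hypothetical random linear maps standing in for Mixtral's SwiGLU feed-forward blocks.

```python
import numpy as np

def top2_moe_layer(x, gate_w, experts):
    """Sparse MoE feed-forward for one token vector x.

    gate_w:  (num_experts, d) router weights (toy shapes, assumed)
    experts: list of callables mapping a (d,) vector to a (d,) vector
    """
    logits = gate_w @ x                              # one router score per expert
    top2 = np.argsort(logits)[-2:]                   # indices of the two highest scores
    scores = logits[top2] - logits[top2].max()       # shift for numerical stability
    weights = np.exp(scores) / np.exp(scores).sum()  # softmax over the top-2 only
    # Only the two selected experts are evaluated; the others are skipped entirely.
    out = sum(wt * experts[i](x) for wt, i in zip(weights, top2))
    return out, top2, weights

rng = np.random.default_rng(0)
d, num_experts = 16, 8
# Hypothetical stand-in experts: random linear maps instead of SwiGLU blocks.
expert_mats = [rng.standard_normal((d, d)) / np.sqrt(d) for _ in range(num_experts)]
experts = [lambda v, m=m: m @ v for m in expert_mats]
gate_w = rng.standard_normal((num_experts, d))

x = rng.standard_normal(d)
y, chosen, w = top2_moe_layer(x, gate_w, experts)
print(y.shape, sorted(chosen.tolist()), float(w.sum()))
```

Only two of the eight expert functions run per token, which is why the active parameter count is much smaller than the total parameter count.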

The art of model blending techniques

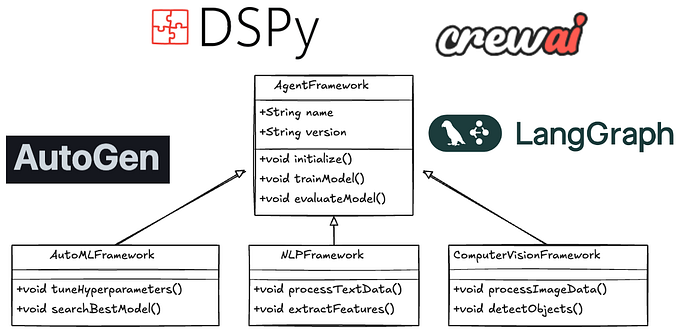

Roughly speaking, I think we can group them into three categories:

- Fine-tuned model adapters

- Mixture of Experts

- Model merging

1. Model Fine-tuned Adapter

With adapters, the parameters of the original network are frozen and can therefore be shared across many tasks. Adapter modules have two main features: a small number of parameters and a near-identity initialization.

LoRA (Low-Rank Adaptation) is a fine-tuning technique that makes adapting pre-trained large language models more efficient. Instead of fine-tuning all the weights in a weight matrix, LoRA freezes the matrix and introduces two smaller low-rank matrices whose product approximates the update to it; only these small matrices are fine-tuned. Together they form the LoRA adapter. Once fine-tuned, the adapter is loaded into the pre-trained model and used for inference.
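A minimal NumPy sketch of the LoRA idea, assuming the common formulation from the LoRA paper: the frozen weight W is augmented by a low-rank product B·A scaled by alpha/r, and B starts at zero so the adapter initially changes nothing (the near-identity initialization mentioned above). The sizes, rank, and alpha are illustrative values, not recommendations.

```python
import numpy as np

rng = np.random.default_rng(0)
d_out, d_in, r, alpha = 64, 64, 4, 8            # toy sizes; r is the LoRA rank

W = rng.standard_normal((d_out, d_in))          # frozen pre-trained weight
A = rng.standard_normal((r, d_in)) * 0.01       # trainable, small random init
B = np.zeros((d_out, r))                        # trainable, zero init

def lora_forward(x, W, A, B, alpha, r):
    """y = W x + (alpha / r) * B (A x); only A and B would be trained."""
    return W @ x + (alpha / r) * (B @ (A @ x))

x = rng.standard_normal(d_in)
# With B = 0 the adapter starts as a no-op: output equals the base model's.
assert np.allclose(lora_forward(x, W, A, B, alpha, r), W @ x)

print("full:", W.size, "lora:", A.size + B.size)    # full: 4096 lora: 512

# Pretend training updated B; the adapter can then be folded into one matrix for inference.
B = rng.standard_normal((d_out, r)) * 0.01
W_merged = W + (alpha / r) * (B @ A)
assert np.allclose(W_merged @ x, lora_forward(x, W, A, B, alpha, r))
```

The trainable parameter count grows with r·(d_in + d_out) rather than d_in·d_out, which is where the efficiency comes from.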

QLoRA takes LoRA's memory efficiency a step further by loading the pre-trained model into GPU memory with 4-bit quantized weights. Combined with a domain- or task-specific dataset, this enables the training of domain- or task-specific adapters. These adapters can be loaded into the base model as needed, making QLoRA an even more memory-efficient solution.
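QLoRA's actual storage format is 4-bit NormalFloat (NF4) with double quantization of the scales. The sketch below swaps in plain blockwise absmax quantization to signed 4-bit integer levels, only to show why storing weights as 4-bit codes plus one scale per block saves memory while keeping errors small. The block size of 64 and the function names are assumptions for illustration.

```python
import numpy as np

def quantize_4bit(w, block_size=64):
    """Blockwise absmax quantization to signed 4-bit integer levels in [-7, 7]."""
    flat = w.ravel()
    pad = (-flat.size) % block_size                 # pad so the array splits into whole blocks
    blocks = np.pad(flat, (0, pad)).reshape(-1, block_size)
    scales = np.abs(blocks).max(axis=1, keepdims=True) / 7.0   # one scale per block
    scales[scales == 0] = 1.0                       # all-zero block: any scale works
    # Stored in int8 here for simplicity; real kernels pack two 4-bit values per byte.
    q = np.clip(np.round(blocks / scales), -7, 7).astype(np.int8)
    return q, scales, w.shape, pad

def dequantize_4bit(q, scales, shape, pad):
    """Rebuild approximate weights from 4-bit codes and per-block scales."""
    flat = (q * scales).ravel()
    return flat[: flat.size - pad].reshape(shape)

rng = np.random.default_rng(0)
w = rng.standard_normal((100, 100)).astype(np.float32)
q, scales, shape, pad = quantize_4bit(w)
w_hat = dequantize_4bit(q, scales, shape, pad)
print("blocks:", q.shape[0], "max abs error:", float(np.abs(w - w_hat).max()))
```

Each weight's rounding error is at most half of its block's scale, so outliers only degrade precision within their own block.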

Here are some specific examples:

- Finetune LLM to convert a receipt image to json or xml

- Fine-tuning LLM Model (Llama2) for inverse information generation

- Build a specialized Llama-2 model for product brand recommendation

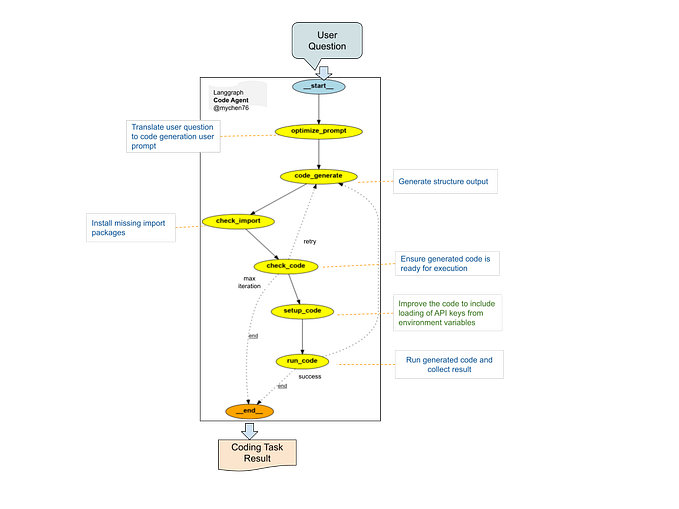

2. Mixture of Experts

This approach involves merging the self-attention and layer normalization parameters from a “base” model with the MLP (Multi-Layer Perceptron) parameters from a collection of “expert” models.

By doing so, it is possible to combine Mistral or Llama models of the same size into a Mixture of Experts model. For the best results and consistency, it is recommended to use fine-tuned models from the same model family, with the same number of layers as the base model.
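A simplified sketch of the parameter assembly described above, assuming each checkpoint is a dict of NumPy arrays with a hypothetical naming scheme (`layers.{i}.mlp.*` for MLP tensors). Shared tensors (attention, norms) come from the base model; each layer's MLP tensors are copied from every expert under an `experts.{e}` prefix. Creating the router weights is deliberately left out, and `toy_model` is a hypothetical stand-in for a real 7B checkpoint.

```python
import numpy as np

def build_moe_state(base, experts, num_layers):
    """Assemble MoE weights: shared tensors from the base, MLP tensors from every expert.

    The router/gate weights are not created here.
    """
    moe = {}
    for name, tensor in base.items():
        if ".mlp." not in name:                 # attention and norms are taken from the base
            moe[name] = tensor.copy()
    for layer in range(num_layers):
        prefix = f"layers.{layer}.mlp."
        for e, expert in enumerate(experts):
            for name, tensor in expert.items():
                if name.startswith(prefix):     # this expert's MLP tensors for this layer
                    suffix = name[len(prefix):]
                    moe[f"layers.{layer}.experts.{e}.{suffix}"] = tensor.copy()
    return moe

def toy_model(seed, num_layers=2, d=4):
    """Hypothetical stand-in for a checkpoint: a few tiny tensors per layer."""
    rng = np.random.default_rng(seed)
    state = {}
    for i in range(num_layers):
        state[f"layers.{i}.self_attn.q_proj"] = rng.standard_normal((d, d))
        state[f"layers.{i}.input_layernorm"] = np.ones(d)
        state[f"layers.{i}.mlp.up_proj"] = rng.standard_normal((2 * d, d))
        state[f"layers.{i}.mlp.down_proj"] = rng.standard_normal((d, 2 * d))
    return state

base = toy_model(0)
experts = [toy_model(s) for s in (1, 2, 3)]
moe = build_moe_state(base, experts, num_layers=2)
print(len(moe), sorted(k for k in moe if k.startswith("layers.0.")))
```

Because only the MLP blocks are duplicated, the merged model grows by the size of the extra MLPs while attention stays shared, mirroring the Mixtral layout described earlier.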

For example, I can stack a set of models, each with its own specialization, and combine them into an MoE model. Each expert gets a list of positive prompts that are used to initialize the router, hinting which kinds of input should activate that expert at runtime. See the sample config below:

```yaml
base_model: mlabonne/Marcoro14-7B-slerp
experts:
  - source_model: openchat/openchat-3.5-1210
    positive_prompts:
      - "chat"
      - "assistant"
      - "tell me"
      - "explain"
  - source_model: beowolx/CodeNinja-1.0-OpenChat-7B
    positive_prompts:
      - "code"
      - "python"
      - "javascript"
      - "programming"
      - "algorithm"
  - source_model: maywell/PiVoT-0.1-Starling-LM-RP
    positive_prompts:
      - "storywriting"
      - "write"
      - "scene"
      - "story"
      - "character"
  - source_model: WizardLM/WizardMath-7B-V1.1
    positive_prompts:
      - "reason"
      - "math"
      - "mathematics"
      - "solve"
      - "count"
tokenizer_source: union
```

3. Model Weight Merging (or Blending)

Blending is all you need: https://arxiv.org/pdf/2401.02994.pdf

Merging the weights of pre-trained language models is a cutting-edge, resource-efficient technique. Tools such as mergekit use an out-of-core approach, so a merge can run on a CPU alone or with GPU acceleration and requires far fewer resources than fine-tuning. Based on my personal experience, performing model merges on a CPU alone is quite responsive and effective.

Model Layer Overlap and Layer Size

Here's the fun part of model merging: you can take a piecemeal approach, selecting slices of layers from each model in your list.

Example-1:

- Model-1 layers [0, 8]

- Model-2 layers [10, 20]

- Model-3 layers [20, 30]

- Model-4 layers [30, 32]

However, simply stacking layers from multiple models does not yield the best results. The recommendation is to have some overlap between the layers.

Example-1 revised with overlap:

- Model-1 layers [0, 8]

- Model-2 layers [4, 12]

- Model-3 layers [8, 16]

- Model-4 layers [12, 20]

- Model-5 layers [14, 22]

- Model-6 layers [18, 26]

- Model-7 layers [22, 30]

- Model-8 layers [26, 32]

Another point: the selected models should have the same layer size (hidden dimension). Mixing architectures could result in unexpected responses.
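The layer bookkeeping for these two examples can be checked with a small Python sketch. It assumes `[start, end]` ranges are end-exclusive, as with mergekit's `layer_range` (so `[0, 32]` covers all 32 layers of a Mistral 7B model); under that assumption, Example-1 as written leaves a two-layer gap between Model-1 and Model-2. The helper names are hypothetical, and `to_passthrough_slices` only builds plain dicts shaped like the passthrough config later in this article.

```python
import json

def describe_stack(slices):
    """slices: list of (model, start, end); end is exclusive (assumed layer_range convention)."""
    total = sum(end - start for _, start, end in slices)
    pairs = list(zip(slices, slices[1:]))
    overlaps = [max(0, prev[2] - nxt[1]) for prev, nxt in pairs]   # shared layer indices
    gaps = [max(0, nxt[1] - prev[2]) for prev, nxt in pairs]       # skipped layer indices
    return total, overlaps, gaps

def to_passthrough_slices(slices):
    """Build the `slices` section of a passthrough-style config as plain dicts."""
    return [{"sources": [{"model": m, "layer_range": [s, e]}]} for m, s, e in slices]

example_1 = [("Model-1", 0, 8), ("Model-2", 10, 20), ("Model-3", 20, 30), ("Model-4", 30, 32)]
revised = [("Model-1", 0, 8), ("Model-2", 4, 12), ("Model-3", 8, 16), ("Model-4", 12, 20),
           ("Model-5", 14, 22), ("Model-6", 18, 26), ("Model-7", 22, 30), ("Model-8", 26, 32)]

print(describe_stack(example_1))   # (30, [0, 0, 0], [2, 0, 0])
print(describe_stack(revised))     # (62, [4, 4, 4, 6, 4, 4, 4], [0, 0, 0, 0, 0, 0, 0])
print(json.dumps({"slices": to_passthrough_slices(revised[:2]),
                  "merge_method": "passthrough"}, indent=2))
```

Note that the overlapping version produces a 62-layer stack, nearly twice the depth of a single 32-layer source model.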

Merging Algorithms and Configuration

Linear

The classic merge method: a simple weighted average of the models' parameters. It also normalizes the weights of all models contributing to a tensor.
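A minimal sketch of a normalized linear merge for a single tensor, assuming every model provides that tensor with the same shape. The function name is hypothetical.

```python
import numpy as np

def linear_merge(tensors, weights, normalize=True):
    """Weighted average of same-shaped tensors taken from several models."""
    w = np.asarray(weights, dtype=np.float64)
    if normalize:
        w = w / w.sum()            # weights now sum to 1
    return sum(wi * t for wi, t in zip(w, tensors))

a = np.array([1.0, 2.0])           # a tensor from model A
b = np.array([3.0, 6.0])           # the same tensor from model B
print(linear_merge([a, b], [1, 3]).tolist())   # [2.5, 5.0]
```

With normalization, weights of 1 and 3 act as 25% and 75%; without it, they would scale the tensor's overall magnitude.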

SLERP

Spherical Linear Interpolation (SLERP) is a technique for smoothly transitioning between two vectors at a constant angular velocity. It focuses on the change in direction of the weights, which often carries more meaningful information (such as learned features and representations) than the magnitude of the change.

By using SLERP, it is possible to merge models in a way that respects the distinct qualities and curvature of each original model, even in high-dimensional spaces.

Here's a classic SLERP configuration, applied to every layer of both models. The self-attention and MLP parameters use different interpolation values, while all other tensors are a 50/50 mix of the two models.

```yaml
slices:
  - sources:
      - model: my-model-1
        layer_range: [0, 32]
      - model: my-model-2
        layer_range: [0, 32]
merge_method: slerp
base_model: my-model-1   # slerp expects the base to be one of the two models above
parameters:
  t:
    - filter: self_attn
      value: [0, 0.5, 0.3, 0.7, 1]
    - filter: mlp
      value: [1, 0.5, 0.7, 0.3, 0]
    - value: 0.5
dtype: bfloat16
```

SLERP can merge only two models at a time. One possible workaround is to chain merges: merge the resulting model with a new model.
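In the configuration above, `t` is given as lists of values; mergekit treats such a list as a gradient spread across the layer stack, with t = 0 returning the base model and t = 1 the other model. The sketch below assumes simple piecewise-linear interpolation by relative layer position, which may differ in detail from mergekit's own positioning. It shows self-attention moving from the first model to the second across the stack while the MLP does the opposite.

```python
import numpy as np

def t_for_layer(values, layer_idx, num_layers):
    """Evaluate a gradient list at one layer by piecewise-linear interpolation (assumed scheme)."""
    if num_layers == 1 or len(values) == 1:
        return float(values[0])
    pos = layer_idx / (num_layers - 1)              # 0.0 at the first layer, 1.0 at the last
    anchors = np.linspace(0.0, 1.0, len(values))    # evenly spaced anchor points for the list
    return float(np.interp(pos, anchors, values))

self_attn = [0, 0.5, 0.3, 0.7, 1]
mlp = [1, 0.5, 0.7, 0.3, 0]
for layer in (0, 8, 16, 24, 31):
    print(f"layer {layer:2d}: self_attn t={t_for_layer(self_attn, layer, 32):.3f}, "
          f"mlp t={t_for_layer(mlp, layer, 32):.3f}")
```

Because the two lists mirror each other, the self-attention and MLP values add up to 1 at every layer.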

The algorithm works as follows:

1. Normalize the input vectors to unit length.

2. Calculate the angle between these vectors using their dot product.

3. If the vectors are nearly collinear, fall back to linear interpolation. Otherwise, compute scale factors based on the interpolation factor t and the angle between the vectors.

4. Use these factors to weigh the original vectors, then sum them to obtain the interpolated vector.
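Following the four steps above, here is a minimal NumPy sketch of SLERP for two weight tensors of the same shape. The 0.9995 collinearity threshold is an assumed value chosen for illustration; a library implementation such as mergekit's may differ in details.

```python
import numpy as np

def slerp(t, v0, v1, dot_threshold=0.9995, eps=1e-8):
    """Spherical linear interpolation between two same-shaped weight tensors."""
    a, b = v0.ravel(), v1.ravel()
    # 1. Normalize copies to unit length (the originals are kept for the final blend).
    a_n = a / max(np.linalg.norm(a), eps)
    b_n = b / max(np.linalg.norm(b), eps)
    # 2. Angle between the vectors from their dot product.
    dot = float(np.clip(a_n @ b_n, -1.0, 1.0))
    # 3. Nearly collinear: fall back to plain linear interpolation.
    if abs(dot) > dot_threshold:
        return (1 - t) * v0 + t * v1
    theta = np.arccos(dot)
    sin_theta = np.sin(theta)
    s0 = np.sin((1 - t) * theta) / sin_theta
    s1 = np.sin(t * theta) / sin_theta
    # 4. Weigh the original vectors and sum them.
    return (s0 * a + s1 * b).reshape(v0.shape)

x = np.array([1.0, 0.0])
y = np.array([0.0, 1.0])
print(slerp(0.5, x, y))   # ~[0.7071, 0.7071]; a plain average would give [0.5, 0.5]
```

The example shows the key difference from linear averaging: the midpoint of two unit vectors stays on the unit circle instead of shrinking toward the origin.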

Task Arithmetic

Task arithmetic: https://arxiv.org/pdf/2212.04089.pdf

This method computes a "task vector" for each model by subtracting the base model's weights from the fine-tuned model's weights. These vectors represent directions in the weight space of the pre-trained model that point toward improved performance on a specific task.

The task vectors are then merged linearly and added back to the base. This works well for models that were fine-tuned from the same base model. The paper reports that:

- Negating a task vector decreases performance on that task (useful for forgetting unwanted behavior)

- Adding task vectors can improve performance on multiple tasks simultaneously

- Combining task vectors from related tasks can improve performance on a task with little or no data of its own
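A minimal sketch of task arithmetic on toy vectors. The fine-tuned models are hypothetical three-parameter vectors, and `scale` plays the role of the scaling coefficient the paper applies to the summed task vectors.

```python
import numpy as np

def task_vector(finetuned, base):
    """Direction in weight space that the fine-tune moved the base model."""
    return finetuned - base

def task_arithmetic_merge(base, finetuned_models, weights, scale=1.0):
    """base + scale * sum_i w_i * (theta_i - theta_base)"""
    combined = sum(w * task_vector(m, base) for w, m in zip(weights, finetuned_models))
    return base + scale * combined

base = np.array([0.0, 0.0, 1.0])
math_model = np.array([1.0, 0.0, 1.0])   # hypothetical fine-tune that moved weight 0
code_model = np.array([0.0, 2.0, 1.0])   # hypothetical fine-tune that moved weight 1

# Adding task vectors combines both skills.
print(task_arithmetic_merge(base, [math_model, code_model], [1.0, 1.0]).tolist())  # [1.0, 2.0, 1.0]
# Negating a task vector moves the model away from that task.
print(task_arithmetic_merge(base, [math_model], [-1.0]).tolist())                  # [-1.0, 0.0, 1.0]
```

Because the two toy fine-tunes touch different weights, their task vectors add without interfering; real task vectors overlap, which is the problem TIES and DARE address.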

TIES

TIES-Merging was introduced in this research paper: https://arxiv.org/pdf/2306.01708.pdf

This method aims to address two challenges in model merging:

1. Redundant model parameters

2. Conflicts due to disagreement between parameter signs

Building on the task arithmetic framework, it resolves interference between models by sparsifying the task vectors and applying a sign consensus algorithm. This lets it merge multiple task-specific models into a single multitask model while retaining more of their individual strengths.

Example configuration:

```yaml
models:
  - model: MODEL-1-BASE
  - model: MODEL-2
    parameters:
      density: 0.5
      weight: 0.5
  - model: MODEL-3
    parameters:
      density: 0.5
      weight: 0.3
merge_method: ties
base_model: MODEL-1-BASE
parameters:
  normalize: true
dtype: float16
```

In this configuration, Model-2 and Model-3 are merged into the base model with weights of 0.5 and 0.3. Because normalize is true, the weights are normalized rather than used as absolute fractions, so where both models contribute, their shares become 0.625 and 0.375. A density of 0.5 means that only the 50% largest-magnitude changes in each model's task vector are retained, while the remaining parameters keep the base model's values. According to experimental results, density values between 0.5 and 0.6 yield meaningful results for Mistral and Llama family models.

The algorithm works as follows:

- Trim: keep only the highest-magnitude (most significant) parameters of each task vector, according to the density

- Elect sign: resolve sign conflicts by choosing the dominant direction for each parameter

- Disjoint merge: average only the parameter values that agree with the elected sign
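The three steps above can be sketched in NumPy as follows. This is a simplified illustration under stated assumptions: trimming is done per tensor by magnitude, the elected sign is the sign of the weighted sum, and `normalize=True` divides by the total weight of the models that agree with it. The paper's extra scaling factor (lambda) on the merged task vector is omitted, and the toy models are hypothetical.

```python
import numpy as np

def trim(delta, density):
    """Keep the top `density` fraction of entries by magnitude; zero out the rest."""
    flat = delta.ravel()
    k = max(1, int(round(density * flat.size)))
    keep = np.argsort(-np.abs(flat))[:k]
    trimmed = np.zeros_like(flat)
    trimmed[keep] = flat[keep]
    return trimmed.reshape(delta.shape)

def ties_merge(base, finetuned, weights, density, normalize=True):
    # 1. Trim: sparsify each task vector (fine-tuned minus base).
    deltas = np.stack([trim(m - base, density) for m in finetuned])
    w = np.asarray(weights, dtype=float).reshape(-1, *([1] * base.ndim))
    weighted = w * deltas
    # 2. Elect sign: the direction with the larger total (weighted) magnitude wins.
    elected = np.sign(weighted.sum(axis=0))
    # 3. Disjoint merge: only nonzero entries that agree with the elected sign contribute.
    agree = (np.sign(weighted) == elected) & (weighted != 0)
    merged = (weighted * agree).sum(axis=0)
    if normalize:
        divisor = (w * agree).sum(axis=0)
        divisor[divisor == 0] = 1.0          # no contributors: keep the base value
        merged = merged / divisor
    return base + merged

base = np.zeros(4)
model_a = np.array([1.0, -2.0, 0.1, 3.0])
model_b = np.array([2.0, 1.5, -0.2, -1.0])
print(ties_merge(base, [model_a, model_b], weights=[1.0, 1.0], density=0.5).tolist())
# [2.0, -2.0, 0.0, 3.0]
```

In the second position the two models disagree (-2.0 vs 1.5); the negative direction wins the election, so model_b's conflicting value is dropped instead of being averaged into a small, meaningless number.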

DARE

DARE research paper: https://arxiv.org/pdf/2311.03099.pdf

Like TIES-Merging (TrIm, Elect Sign & Merge), this method sparsifies task vectors to reduce interference. However, instead of TIES's magnitude-based trimming, DARE (Drop And REscale) randomly drops delta parameters and rescales the remaining ones, so that the merged model stays close to the performance of the original models.

DARE can be combined with the TIES sign consensus algorithm (dare_ties) or used without it (dare_linear). In both cases, DARE rescales the weights to keep the expected model outputs approximately unchanged. Here's a sample merge config:

```yaml
models:
  - model: MODEL-1-BASE
  - model: MODEL-2
    parameters:
      density: 0.6
      weight: 0.4
  - model: MODEL-3
    parameters:
      density: 0.6
      weight: 0.3
  - model: MODEL-4
    parameters:
      density: 0.6
      weight: 0.3
merge_method: dare_ties
base_model: MODEL-1-BASE
parameters:
  int8_mask: true
dtype: bfloat16
```

This example merges three models into the base model using dare_ties. In my model merging experiments, this method achieved the best scores on the Open LLM Leaderboard benchmarks.
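The drop-and-rescale step can be sketched in a few lines of NumPy. Each entry of a task vector is kept with probability equal to the density and divided by the density when kept, so its expected value is unchanged. `dare_linear_merge` is a hypothetical helper illustrating the dare_linear idea (no sign election); dare_ties would additionally apply the TIES sign consensus step before summing.

```python
import numpy as np

def dare(delta, density, rng):
    """Drop And REscale: keep each entry with probability `density`, rescale kept ones by 1/density."""
    keep = rng.random(delta.shape) < density        # Bernoulli mask, P(keep) = density
    return np.where(keep, delta / density, 0.0)

def dare_linear_merge(base, finetuned, weights, density, seed=0):
    """dare_linear-style merge: DARE on each task vector, then a weighted sum (no sign election)."""
    rng = np.random.default_rng(seed)
    merged = sum(w * dare(m - base, density, rng) for w, m in zip(weights, finetuned))
    return base + merged

rng = np.random.default_rng(42)
delta = np.ones(100_000)
sparse = dare(delta, 0.6, rng)
# Roughly 60% of entries survive, and the mean stays close to the original value of 1.0.
print(round(float((sparse != 0).mean()), 3), round(float(sparse.mean()), 3))
```

The rescaling by 1/density is what distinguishes DARE from plain random pruning: without it, every dropped entry would shrink the task vector's average effect.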

Passthrough

The passthrough method copies input tensors through unmodified. Used with layer-stacking merges, it can produce a single large model of unconventional size. For instance, Goliath-120b was constructed from two Llama-2 70B models using a passthrough configuration.

Here’s an example config:

```yaml
slices:
  - sources:
      - model: MODEL-1
        layer_range: [0, 32]
  - sources:
      - model: MODEL-2
        layer_range: [24, 32]
merge_method: passthrough
dtype: bfloat16
```

This config merges two models by taking all 32 layers from MODEL-1 and another 8 layers from MODEL-2, resulting in a 40-layer model with 8.99B parameters.
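The 8.99B figure can be roughly reproduced with a quick count, assuming both models are Mistral-7B derivatives (hidden size 4096, MLP size 14336, 32 attention heads with 8 key/value heads, a 32,000-token vocabulary, and an untied output head). These config values are assumptions, since the source models are not named, and `mistral_like_params` is a hypothetical helper, not a mergekit API.

```python
def mistral_like_params(num_layers, hidden=4096, intermediate=14336,
                        num_heads=32, num_kv_heads=8, vocab=32000):
    """Rough parameter count for a Mistral-7B-style decoder (assumed config values)."""
    head_dim = hidden // num_heads
    kv_dim = num_kv_heads * head_dim
    attn = hidden * hidden * 2 + hidden * kv_dim * 2      # q, o projections + k, v projections
    mlp = 3 * hidden * intermediate                        # gate, up, down projections
    norms = 2 * hidden                                     # two RMSNorm weight vectors per layer
    per_layer = attn + mlp + norms
    embeddings = 2 * vocab * hidden                        # input embeddings + untied LM head
    return num_layers * per_layer + embeddings + hidden    # + final norm

slices = [(0, 32), (24, 32)]                               # layer_range values from the config
layers = sum(end - start for start, end in slices)
print(layers, mistral_like_params(32), mistral_like_params(layers))
# 40 7241732096 8986628096
```

The 32-layer count matches Mistral 7B's published size, and each extra layer adds about 218M parameters, which is why 8 stacked layers grow the model by roughly 1.75B.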

4. Model Ensemble

Model ensembling is a traditional technique that combines the outputs of multiple models to enhance overall system performance. It is not a model merging technique: the models remain separate, and at inference time their top-ranked candidate outputs are combined to produce a better final output.
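Score-weighted voting is one simple way to combine candidates from separate models. In this sketch, the candidate answers and scores are hypothetical, and answers are normalized by lowercasing before their scores are pooled; many other combination strategies exist.

```python
from collections import defaultdict

def ensemble_vote(candidates):
    """candidates: (answer, score) pairs pooled from several models.
    Returns the normalized answer with the highest total score."""
    totals = defaultdict(float)
    for answer, score in candidates:
        totals[answer.strip().lower()] += score
    return max(totals, key=totals.get)

# Hypothetical top-ranked candidates from three separate models.
pooled = [("Paris", 0.9), ("Lyon", 0.4),    # model A
          ("paris", 0.7),                   # model B
          ("Lyon", 0.8), ("Paris", 0.3)]    # model C
print(ensemble_vote(pooled))  # paris
```

Unlike merging, every model still runs at inference time, so the cost grows with the number of models in the ensemble.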

5. Combining LLMs with Mixed Architectures

The model merging techniques discussed in Sections 2 (MoE) and 3 (model weight merging) work best when all models share the same architecture. For merging models with mixed architectures, the paper "Knowledge Fusion of Large Language Models" (https://arxiv.org/pdf/2401.10491.pdf) introduces new techniques.

The concept behind this approach is to create a unified model by leveraging the collective capabilities and unique strengths of various Large Language Models (LLMs).

6. My Model Merging Experiments

To verify the model merging techniques, I built several merged models, which can be found and explored on the Open LLM Leaderboard.

You can find my MoE and model merging collection here.

Based on my test results, the merging algorithm that stands out is DARE. Here are the Open LLM Leaderboard benchmark results for my DARE-merged model:

| Metric | Value |
|---|---|
| Avg. | 73.46 |
| AI2 Reasoning Challenge (25-Shot) | 69.62 |
| HellaSwag (10-Shot) | 87.04 |
| MMLU (5-Shot) | 65.18 |
| TruthfulQA (0-shot) | 66.98 |
| Winogrande (5-shot) | 80.58 |
| GSM8k (5-shot) | 71.34 |

Result-1: the score is better than NousResearch Mixtral-8x7B-DPO.

Result-2: it beats the Qwen-72B model on 4 of the 6 benchmarks.

I also uploaded a quantized Mixtral 6x7B GGUF version here.

One question that comes to mind: how many models can we stack together, and what is the limit? Someone tried merging a total of 14 models here: EmbeddedLLM/Mistral-7B-Merge-14-v0.1. Technically speaking, a merged model can be used as the base for a new merge, which allows models to be blended and mixed continuously over time :-)

Runtime Inference Cost

In general, model merging is a cost-effective and time-saving approach.

LoRA adapters share the same base model at runtime and are loaded on demand, which makes them very resource-efficient. According to Predibase's LoRA Land project, 25 fine-tuned LoRA adapters for an open-source LLM, each outperforming GPT-4 on its specific task, can be served from a single GPU.

Another efficient approach is MoE (Mixture of Experts), which uses small, efficient experts to maximize efficiency on specific tasks, similar to adapters but without the training cost.

Caveats

Although it is still an emerging field, model merging is progressing rapidly toward maturity. However, like other methods, it has drawbacks. For instance, because the layers selected for merging can be arbitrary, the merged weights may differ significantly from the original parameters, leading to unpredictable outcomes.

Mixed-architecture methods are still in the experimental stage.

Continuously blending and mixing models can easily lead to a loss of control over the model. In my opinion, an alternative approach is to fine-tune models on one's own domain dataset, with visibility into changes and tracking of data lineage. This approach offers finer control over the model's domain-specific tasks.

In summary

I am highly impressed with the outcomes of the innovative model merging techniques. It is an excellent approach to further enhance pre-trained models that belong to similar families or share common ancestors.

I hope you find this useful.

Thanks for reading, have a nice day!

References:

Open LLM Leaderboard: https://huggingface.co/spaces/HuggingFaceH4/open_llm_leaderboard

Benchmarks

ARC benchmark: https://deepgram.com/learn/arc-llm-be... https://arxiv.org/pdf/1803.05457.pdf

HellaSwag: https://arxiv.org/pdf/1905.07830.pdf

MMLU: https://arxiv.org/pdf/2009.03300.pdf

TruthfulQA: https://arxiv.org/abs/2109.07958

WinoGrande: https://arxiv.org/pdf/1907.10641.pdf

GSM8K: https://arxiv.org/pdf/2110.14168.pdf

Research Papers

Merging models with different architectures (Knowledge Fusion of Large Language Models): https://arxiv.org/pdf/2401.10491.pdf

Merging models with different architectures (FuseLLM code): https://github.com/18907305772/FuseLLM

Blending is all you need: https://arxiv.org/pdf/2401.02994.pdf

TIES-Merging research paper: https://arxiv.org/pdf/2306.01708.pdf

DARE research paper: https://arxiv.org/pdf/2311.03099.pdf

Task arithmetic: https://arxiv.org/pdf/2212.04089.pdf

mergekit: https://github.com/arcee-ai/mergekit

LazyMergekit: https://colab.research.google.com/drive/1obulZ1ROXHjYLn6PPZJwRR6GzgQogxxb?usp=sharing#scrollTo=1Wq4SB9A_9ic